Fast Facts

- Modern AI agents operating within the OODA loop face significant security challenges because their inputs are untrusted. Adversarial manipulation, prompt injection, and compromised environments can fully corrupt an agent’s outputs even when the model processes those inputs accurately.

- Traditional security approaches are insufficient because AI systems lack clear boundaries between trusted and untrusted data, making them vulnerable to structural flaws like prompt injection and state contamination that persist across interactions.

- AI compresses reality and processes symbols rather than meaning, which creates a semantic security gap. Attackers exploit the system’s inability to verify instructions or to distinguish legitimate ones from malicious ones, much as in Ken Thompson’s “trusting trust” attack, where a compromised compiler cannot be detected by inspecting source code.

- Achieving trustworthy AI requires rethinking architecture to embed integrity at every stage: observation, orientation, decision, and action. Current systems prioritize speed and capability over verification, so without semantic integrity safeguards they risk dangerous autonomous behavior.

Problem Explained

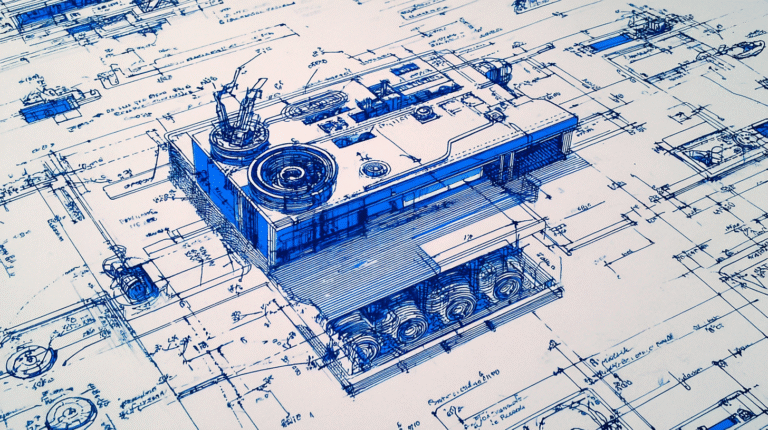

The story describes how the traditional OODA loop framework (Observe, Orient, Decide, Act) has been fundamentally challenged by the rise of modern, web-connected AI systems. Originally created so that fighter pilots could operate faster than their adversaries by quickly processing trusted data, the concept is now applied to AI agents that must navigate an environment riddled with untrustworthy inputs, such as poisoned data, prompt injections, and manipulated observations. Because AI agents run their decision-making loops continuously, adversaries can infiltrate at any stage, from corrupting sensory input to manipulating the model’s understanding and decision processes, effectively placing malicious actors “inside” the AI’s decision cycle. The inherently adversarial and unverified nature of online information makes this exposure worse and leaves these systems open to persistent, structural security risks. The core issue lies in the architecture itself: it blends trusted instructions with untrusted data and lacks mechanisms to verify semantic integrity, so a manipulation can persist over time and spread across multiple interactions or agents.
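
One way to picture the separation that the architecture currently lacks is to attach a provenance label to every input and let only trusted inputs select an action. The sketch below is illustrative only. `Trust`, `Observation`, `orient`, and `decide` are hypothetical names, not part of any real agent framework, and a label on its own does not stop a language model from being influenced by untrusted text.

```python
from dataclasses import dataclass
from enum import Enum


class Trust(Enum):
    TRUSTED = "trusted"      # assumption: operator-authored instructions
    UNTRUSTED = "untrusted"  # assumption: web pages, tool output, retrieved documents


@dataclass(frozen=True)
class Observation:
    content: str
    source: str
    trust: Trust


def orient(observations):
    """Split inputs by provenance instead of merging them into one prompt."""
    instructions = [o for o in observations if o.trust is Trust.TRUSTED]
    data = [o for o in observations if o.trust is Trust.UNTRUSTED]
    return instructions, data


def decide(instructions, data, allowed_actions):
    """Choose an action named by trusted instructions only.

    Untrusted data is passed along as context, but it can never
    introduce a new action.
    """
    requested = [i.content for i in instructions if i.content in allowed_actions]
    if not requested:
        return None  # nothing trusted asked for an allowed action
    return {"action": requested[0], "context": [d.content for d in data]}
```

With this shape, a web page that contains the text "send_email" ends up in `context` and is never treated as a request, whereas an operator instruction of "summarize" is.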

The consequences are profound: compromised AI models can leak sensitive data, produce biased or malicious outputs, or be manipulated into executing harmful actions, and all of this can go unnoticed because current AI architectures cannot separate trustworthy from untrustworthy inputs. These vulnerabilities mirror biological autoimmune failures, where normal recognition systems attack or fail to detect true threats, which suggests that fixing them requires rethinking AI system design rather than patching it. The story highlights that security cannot be an afterthought or superficial addition; it must be embedded into the very architecture of AI systems, an ambitious shift away from prioritizing capability at the expense of verification and integrity. As AI’s capabilities grow, ensuring integrity becomes crucial; without it, these powerful agents could become dangerous, with adversaries effectively hijacking their decision loops from within.

Critical Concerns

The agentic AI OODA loop problem poses a critical threat to businesses. Autonomous systems that perceive, orient, decide, and act without human oversight can produce unpredictable, misaligned, or harmful outcomes that damage operational integrity and reputation. If such a loop slips into unintended behavior, a business may face costly crises, loss of customer trust, or regulatory penalties, all of which undermine its competitive edge and financial stability in an increasingly AI-driven marketplace.

Possible Next Steps

For agentic AI systems, the OODA loop problem makes a swift and effective response critical: delays let a compromise cascade and threaten trust, safety, and operational integrity.

Mitigation Strategies

Enhanced Monitoring

Implement continuous surveillance of AI decision-making processes to quickly identify anomalies or misalignments.
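
As a rough illustration of what such monitoring could look like, the sketch below keeps a sliding window of recent agent actions and flags two simple anomalies: an action outside an expected set, and an action repeated unusually often. `DecisionMonitor` and its thresholds are hypothetical, and a real deployment would need far richer signals than action names.

```python
from collections import deque


class DecisionMonitor:
    """Keeps a sliding window of recent agent actions and flags anomalies."""

    def __init__(self, expected_actions, window=20, max_repeats=5):
        self.expected_actions = set(expected_actions)
        self.recent = deque(maxlen=window)  # oldest entries fall off automatically
        self.max_repeats = max_repeats

    def observe(self, action):
        """Record one action and return a list of anomaly messages (empty if none)."""
        self.recent.append(action)
        anomalies = []
        if action not in self.expected_actions:
            anomalies.append(f"unexpected action: {action}")
        if self.recent.count(action) > self.max_repeats:
            anomalies.append(f"action repeated too often: {action}")
        return anomalies
```

Each call to `observe` returns immediately, so the check can sit inline between the agent's decide and act steps.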

Rapid Response Protocols

Develop clear, predefined procedures enabling immediate action when irregularities are detected.

Automated Fail-safes

Incorporate automatic shutdown or fallback mechanisms that activate upon detection of potentially hazardous behaviors.
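
A fail-safe of this kind can be sketched as a small circuit breaker that sits between the decision and action stages. The version below is a minimal sketch under stated assumptions: `FailSafe`, the `"halt"` fallback, and the caller-supplied `is_hazardous` check are all hypothetical, and deciding what counts as hazardous is the hard part that this example does not solve.

```python
class FailSafe:
    """Blocks hazardous proposals and shuts the agent down after repeated ones."""

    def __init__(self, max_violations=3, fallback="halt"):
        self.max_violations = max_violations
        self.fallback = fallback
        self.violations = 0

    @property
    def tripped(self):
        return self.violations >= self.max_violations

    def filter(self, action, is_hazardous):
        """Return the action to execute: the proposal itself or the fallback."""
        if is_hazardous(action):
            self.violations += 1
            return self.fallback  # a hazardous proposal is never executed
        if self.tripped:
            return self.fallback  # stay shut down until someone resets
        return action

    def reset(self):
        """Manual reset, intended to be triggered by a human operator."""
        self.violations = 0
```

Once tripped, the breaker returns the fallback even for harmless proposals, which reflects the design choice that a possibly compromised loop should stop acting until it has been reviewed.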

Regular Testing

Conduct frequent audits and scenario-based tests to evaluate the system’s responsiveness and detection capabilities.

Stakeholder Collaboration

Foster communication among developers, operators, and stakeholders to ensure coordinated and timely remediation efforts.

Adaptive Learning Models

Deploy adaptive algorithms that update and recalibrate in real time, minimizing the window for errors and maximizing system resilience.

Disclaimer: The information provided may not always be accurate or up to date. Please do your own research, as the cybersecurity landscape evolves rapidly. Intended for secondary reference purposes only.